The Riddle of Malnutrition - Jennifer Tappan

INTRODUCTION

The riddle of malnutrition, which proved puzzling to health workers in the East African country of Uganda, as in other world regions, concerned the syndrome now known as severe acute malnutrition. Severe acute malnutrition is the most serious and most fatal form of childhood malnutrition. Global estimates in the early twenty-first century indicate that the condition annually affects between ten and nineteen million children, with over five hundred thousand dying before they reach their fifth birthday.1 The condition was first recognized, as a form of protein deficiency known as kwashiorkor, in the mid-twentieth century and for a time was a central international concern.2 Severe acute malnutrition is currently defined in fairly simple terms, but is far from a simple condition. Children who exhibit “severe wasting” or a weight-for-height ratio that is less than 70 percent of the average for their age are seen to be suffering from severe acute malnutrition. Alternative markers include nutritional edema or very low mid-upper arm circumference measurements. Children diagnosed as severely malnourished require immediate therapy and run a very high risk of succumbing to the condition.3 What is more, recent investigations suggest that even those who do survive appear to suffer from long-term impacts on their overall growth and development.4

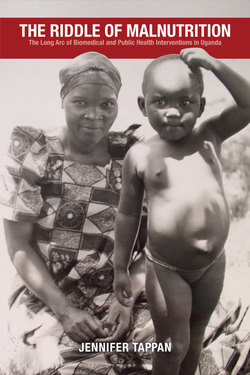

Until the late twentieth century, the condition now diagnosed as severe acute malnutrition, or SAM, was thought to be two entirely separate syndromes. Kwashiorkor and marasmus, which are now recognized as extreme manifestations of the same condition, occupy opposing ends along a spectrum of severe malnutrition. Marasmus is defined as undernutrition or frank starvation with the extreme and highly visible wasting of both muscle and fat (see fig I.1). Kwashiorkor, on the other hand, is seen as a form of malnutrition and although the specific cause or set of causal factors that lead to kwashiorkor remain uncertain, kwashiorkor came to be associated with a diet deficient in protein.5 In sharp contrast with the very thin appearance of children suffering from marasmus, the most important and consistent symptom of kwashiorkor is edema, or an accumulation of fluid in the tissues, which gives severely malnourished children a swollen and plump, rather than starving, appearance (see fig I.2). This telltale swelling is exacerbated by an extensive fatty buildup beneath the skin and in the liver, and these symptoms long confounded biomedical efforts to understand the condition and connect it to poor nutritional health. Many children with kwashiorkor also develop a form of dermatosis, or rash, in which the skin simply peels away, and they often lose the pigment in their hair. One of the most distressing aspects of the condition is the extent to which children with kwashiorkor suffer. They are visibly miserable, apathetic, and anorexic.

FIGURE I.1. Marasmus. Source: D. B. Jelliffe and R. F. A. Dean, “Protein-Calorie Malnutrition in Early Childhood (Practical Notes),” Journal of Tropical Pediatrics, December 1959, 96–106, by permission of Oxford University Press.

The refusal to eat further exacerbates and contributes to an impaired ability to digest food, increasing the high mortality rates associated with severe acute malnutrition, especially among children suffering from kwashiorkor. Prior to the 1950s, case fatality rates in Africa ranged widely but were frequently cited as high as 75 and even 90 percent. Although these mortality rates fell considerably when new therapies were developed, they remained at unacceptably high global rates of between 20 and 30 percent until the twenty-first century. A new set of therapeutic protocols promises to reduce the associated mortality by more than half, but has only inconsistently achieved such rates of recovery and survival. Severely malnourished children who are infected with HIV experience the highest case fatality rates, which may have been a factor contributing to the mortality associated with the condition long before the discovery of HIV in the 1980s.6 Moreover, severe acute malnutrition, like undernutrition more broadly, cannot be entirely separated from other forms of debility and disease, as poor nutritional health significantly increases the morbidity and mortality of a wide range of infections including HIV. Despite the “synergistic association” between undernutrition and disease, poor nutritional health is considered the cause of death only when recovery and survival are specifically compromised by the presence of malnutrition. On this basis, global estimates indicate that, taken together, various forms of undernutrition accounted for over three million deaths in children under the age of five in 2011, a figure encompassing an astonishing 45 percent of worldwide infant and child mortality.7 The relatively small fraction of under-five mortality that is directly attributed to severe acute malnutrition alone, estimated in 2011 to be approximately 7.4 percent, nonetheless represents more than five hundred thousand children, a death toll on par with malaria.8

FIGURE I.2. Kwashiorkor. Courtesy of Paget Stanfield.

Like malaria, the prevalence of severe acute malnutrition is concentrated in particular world regions.9 The overall global prevalence was estimated in 2011 to be approximately 3 percent, which roughly equates to nineteen million children, with the highest prevalence rates found in central Africa where an estimated 5.6 percent are severely malnourished. Global indications suggest that the prevalence of less severe forms of childhood malnutrition decreased since the 1990s, with Africa as the only exception.10 Evidence from Uganda, where a 2010 survey found that 2 percent of children were severely malnourished and 6 percent showed signs of less severe wasting, corroborates this trend.11 In the mid-twentieth century, annual prevalence in Uganda was estimated at 1 percent, although statistics from the Ugandan Ministry of Health indicate that, based on the twenty-three thousand to thirty-six thousand children annually diagnosed with malnutrition between 1961 and 1966, the prevalence may have been closer to between 2 and 3 percent.12 Establishing even estimates of historical prevalence rates must confront a number of significant challenges. Prior to the 1950s, kwashiorkor was not widely recognized as a condition, and in Uganda, biomedical practitioners later equated a number of different locally recognized forms of illness as kwashiorkor.13 What is more, prevalence has often been assumed to be more or less static, meaning that, until fairly recently and except when assessing the success of specific interventions, little effort was made to investigate the shifting epidemiology of severe acute malnutrition.14 The result is that we are left with the knowledge that severe acute malnutrition remains a serious problem in many parts of the world, but have, at best, an incomplete understanding of how prevalence may have shifted over time. This gap in existing knowledge limits efforts to consider the role of contributing factors, including economic and social variables.15

In the postwar development era, when betterment schemes promised to lift entire populations out of poverty, severe acute malnutrition in Africa and other global regions did become a central international concern.16 It not only occupied the attention of biomedical experts and nutritionists, but, due in large part to the jarring images of severely malnourished children that Time Life Magazine published in 1968—photos of children from the refugee camps of Nigeria’s Biafra War—the condition also became the poster child for humanitarian aid and volunteerism. It became emblematic of one of the most pervasive tropes of the African continent: that of the starving African child. The Nigerian novelist Chimamanda Ngozi Adichie’s poetic critique of the fleeting philanthropy that such imagery inspires—“Did you see? Did you feel sorry briefly . . . ?”—speaks to the waxing and waning attention, and attendant funding, for such global health concerns.17 The fluctuating international interest and investment in the problem of severe acute malnutrition reveal the role of biomedical research and programming in global health faddism. International interest in severe acute malnutrition has proven to be fleeting, and as global concern waned in the late twentieth century, past efforts to combat the condition were swept under the rug, all but forgotten. Galvanized by therapeutic innovations, a new generation of global health workers, biomedical experts, and philanthropists has succeeded in returning severe acute malnutrition to the international limelight. Without knowledge of prior initiatives, their work cannot build on previous endeavors or avoid past mistakes.

Enduring Engagements

One of the most important facets of nutritional research and programming in Uganda is that it represents a largely uninterrupted effort to understand and contend with a single condition for the better part of a century. This is remarkable because many, if not most, biomedical interventions in Africa from the colonial period onward have been time-limited. Public health projects and programs with end dates seek to make a lasting impact through temporary measures, and these measures are seen to either succeed or fail. Historians of colonial medicine and global health have, especially in recent years, drawn attention to the many insights that emerge from an examination of these targeted endeavors. A key observation is that Africans on the receiving end of health-related work are not merely passive recipients or biomedical subjects. Instead, they interpret and make meaning of health provision in ways that are tied to a complex set of local, and very often historical and thus dynamic, perceptions and experiences.18 It has been shown that interpretations of both research and programming shape how people engage with biomedical projects and programs, how they are incorporated and thereby altered, or rejected and avoided.19 The result has very often been a number of unintended consequences that typically impede stated objectives. Among scientists and public health experts, failure signals the need to return to the drawing board in order to devise better tools and methods, and in the global health context this typically involves packing up and returning to North Atlantic centers of research and policy development. 
Prior public health programming, especially when unsuccessful, is frequently then forgotten, leaving the lessons of past mistakes sadly out of reach for those launching at times nearly identical initiatives at a later date.20 Yet, when these interventions come to the end of their time frame, they leave a trail of data and reports for later analysis and what historian Melissa Graboyes has recently referred to as “accumulated reflections.” Interpretations and perceptions of medical endeavors accumulate among populations in Africa and elsewhere, and the residue of these past experiences continues to influence their view of and response to future efforts for perhaps decades if not generations to come.21

Recognizing that health-related work leaves a residue of past interactions and encounters and that even time-limited projects have a social afterlife illustrates one of the fundamental shortcomings of neglecting historical epidemiology.22 Assuming that recipient populations are like blank slates in their perceptions of biomedical work overlooks how past experiences and programs influence future initiatives. While communities in Africa may at times be “biological blank slates,” and thus represent unparalleled opportunities for testing new vaccines and drugs, their interpretation of and engagement with such work filters through the residue of past medical projects and programs.23 The long history of nutrition research and programming in Uganda is an opportunity to examine how perceptions of biomedical research and treatment shaped the outcome of such endeavors. My analysis explores how the residue of past medical work fundamentally influenced how people engaged with biomedicine and public health. It asks how the residue of past experiences thereby influenced health-related research and programming. In tracing this influence it also reveals the dynamism inherent in local interpretations and interactions. Even “accumulated reflections” are open to change and, as nutritional work in Uganda shows, were highly responsive to the shifting research protocols and evolving programs of treatment and prevention that they themselves engendered.24

The nutritional work conducted in Uganda for the better part of a century challenges common definitions of global health. According to a recent volume titled Global Health in Africa, global health has its origins in colonial times, but emerged in the post–World War II period and can be defined “broadly to refer to the health initiatives launched within Africa by actors based outside of the continent.”25 Yet, in addition to the ways that local interactions influenced nutritional work in Uganda throughout the period under consideration, it is also true that Ugandans were pivotal to the nutrition research and especially the later programming that are at the center of this study. In fact, both biomedical training and infrastructure in Uganda proved crucial to the evolution and longevity of a public health program that continues to be of great significance to many Ugandans. The public health approach that emerged in Uganda was far more of a local endeavor than it was a global health initiative.26 When initial efforts failed, biomedical personnel returned to the drawing board, but it was a drawing board in Uganda. The research protocols and initiatives that were then devised explicitly harnessed local engagement with biomedicine—they put enduring engagements in the service of public health. In fact, the period of failure that preceded the advent of this novel public health approach was one marked by Uganda’s emergence as an international center of nutrition science and the post–World War II rise of global health.27 In launching Africa’s first nutrition rehabilitation program, the expatriate architects of the initiative saw the errors of existing global health models and devised a new one.

Mulago and the Kingdom of Buganda

The Mulago medical complex has long been the locus of biomedical research and provision in Uganda, and Mulago together with the region surrounding one rural health center constitute the two principal sites of fieldwork for this study. Mulago is one of the many hills that define the landscape of Uganda’s contemporary capital city, Kampala. This urban center has also long been the political capital of Buganda, one of the numerous interlacustrine kingdoms that dominated this region of East-Central Africa prior to colonial imposition.28 Stretching like a fertile crescent across the northwestern shores of Africa’s largest lake, Buganda spans from the Nile River in the east to the Kagera River in the southwest. The kingdom’s controversial northern boundary was extended under British suzerainty to the Kafu River, finalizing a centuries-long process of territorial expansion and regional ascension (see map I.1).29 European explorers and missionaries had been present in Buganda, alongside coastal merchants, since the mid-nineteenth century, but the British did not become actively involved in Buganda’s political affairs until the late 1880s. Through amicable relations and a strategic alliance formalized with the British in 1894, Buganda and the port town of Entebbe became the political and economic headquarters of the British protectorate. Ganda participation in the pacification and administration of other areas within present-day Uganda placed Ganda in an advantageous position and created a divisive context with significant consequences following independence.30

The British were impressed by what they saw as an exceptional example of a progressive and sophisticated state in the heart of tropical Africa, and sought to govern indirectly through Buganda’s highly centralized and bureaucratic structure of chiefs and royal officials. An agreement signed in 1900 established, for the kabaka (king) and the reigning Ganda chiefs, a degree of political autonomy and, notably, freehold rights to virtually all of the productive land in Buganda. Parceled out in estates so vast that they were measured in miles (and became known as mailo), land in Buganda was transformed into the private property of what then became an oligarchy of Ganda chiefs.31 Even as it increased the power of chiefs vis-à-vis ordinary Ganda, this agreement kept Uganda from becoming a settler colony. Ugandans were able to thereby avoid the fate of those in neighboring Kenya and Southern Africa, where, by contrast, land alienation left Africans to subsist on the diminishing resources of overcrowded Native Reserves or as squatters and tenants on white-owned farms. The agreement, together with the completion of the Uganda Railway in 1901, set the stage for the rapid development of a flourishing export-oriented cash-crop economy, based initially on the small-scale peasant production of cotton and later on the far more lucrative cultivation of coffee. For average Ganda, most other avenues of upward mobility were effectively blocked by the Indian and expatriate monopoly on the processing and marketing sectors of the Ugandan economy.32

As the commercial and administrative center of the British Protectorate, Buganda was also the hub of both government and missionary education and medical provision. Albert Cook of the Church Missionary Society (CMS) established the largest and most successful medical mission station in East Africa on a hill not far from the capital or kibuga of Buganda. As in other regions of the continent, education and medical services for African populations were initially the sole purview of the missionaries.33 Particularly in the wake of a devastating sleeping sickness epidemic and concerns over demographic decline linked to venereal infections, the colonial government did eventually begin providing medical services and training medical auxiliaries.34 The very high standards of medical training achieved at Mulago transformed the associated vocational school into a major research university. By the late colonial period, the Mulago medical complex was a center of research and training, drawing the best students from Uganda, Kenya, present-day Tanzania, and other regions of the continent.35 It was this strong foundation of medical training that made Uganda a site of cutting-edge biomedical research—research initially focused on understanding and treating severe acute malnutrition.

MAP I.1. Uganda. Map by Shawna Miller.

The Riddle of Malnutrition traces longstanding efforts to understand, treat, and prevent severe acute malnutrition. These efforts initially served to medicalize the condition in the eyes of both biomedical personnel and the Ugandans who brought their severely malnourished children to the hospital for treatment and care. Medicalization meant that the condition came to be seen as a disease and a medical emergency.36 My analysis explores how this understanding of the condition undermined prevention with unintended consequences, further imperiling the health and welfare of young children in Uganda. Biomedical personnel responded to the failure of prevention by launching Africa’s first nutrition rehabilitation program. The program they designed aimed to demedicalize malnutrition, to learn from past mistakes, and it is one of the arguments of this book that the apparent efficacy and remarkable longevity of the nutrition rehabilitation program was the result of this critical reflection on the inadequacies of prior initiatives. Examining the perspective that was thereby gained reveals the immense value of historical epidemiology. It also shows how the advent of a novel public health approach to severe acute malnutrition built on Uganda’s strong foundation of biomedical training and expertise and local engagements with biomedical treatment and care. As the program evolved it became a truly local initiative with a lasting legacy in at least one part of Uganda and with, at one time, aspirations to become a national program promoting nutritional health among all Ugandan children. How such a program could be largely forgotten outside Uganda is also a part of this history, and the potential implications of this unwitting amnesia are considered in a final examination of how recent innovations may return us to an earlier era when a medicalized approach compromised nutritional health in Uganda. 
This study is written in part to try to break this cycle of neglecting past public health initiatives as a new generation works to devise and advocate for policies, technologies, and programs that promise a healthier and more secure future for people around the world.