Recalculating: Steve Chapman on a New Century - Steve Chapman


Geographic snobbery at home in the news media

Sunday, January 30, 2000

It’s well-known among journalists that across this broad land, people dislike the news media. Why those of us who spend our working lives fearlessly bringing the truth to the American people should be met with such ingratitude is a mystery. But the average person tends to think of journalists as arrogant, insular and condescending. I can think of only one obvious explanation for this perception: In many cases, it’s true.

This will not come as breaking news to Southerners, who are used to being lampooned in the national press as snaggle-toothed bumpkins prone to marrying their underage cousins and keeping rusted-out jalopies in the front yard. The arrival of out-of-state reporters in Atlanta for the Super Bowl provided the occasion for some of them to wax scornful about the city’s countless shortcomings.

USA Today sports columnist Jon Saraceno, who is to humor what Mike Ditka is to self-control, felt the need to joke about his dissatisfaction with the host city, whose enumerated sins include John Rocker, Ted Turner, bad traffic and the bomb that went off at the 1996 Summer Olympics. In an effort to be fair, he did express regret that Tampa Bay didn’t make it to the big game so we could have had “our first Bubba Bowl. . . . For all the grits.”

Saraceno also had to acknowledge that he had once bought a truck in Atlanta, and “it didn’t have a gun rack, a CB radio or tobacco juice splattered all over the dash.” Gee, you think he got a discount?

But that one tolerable experience was not enough to justify the NFL’s inexplicable decision to play the Super Bowl in the Georgia Dome: “Atlanta has hosted only one previous Super Bowl. Personally speaking, I could’ve waited until Y3K for the second.”

Saraceno should commiserate with New York Times foreign affairs columnist Thomas Friedman, whose snobbery toward the Southern provinces is even more pronounced. After North Carolina Republican Sen. Jesse Helms spoke recently at the United Nations, Friedman was sputtering with outrage. “Who authorized him to speak for America?” he demanded. “Why don’t they send Jed Clampett from ‘The Beverly Hillbillies’ to the UN too, so we can hear what he has to say about foreign policy?”

Maybe Friedman, who seems more at home in Jakarta than in Jacksonville, has been spending too much time abroad. Jesse Helms has some authority to speak on international affairs because he happens to be chairman of the Senate Foreign Relations Committee. And though his arch-conservative political views put him badly out of step with The New York Times’ editorial page, this hillbilly has not been too dimwitted to be elected to the Senate five times by the people of North Carolina.

If Friedman has never journeyed to that trackless waste, he can read up on it in the latest edition of “The Almanac of American Politics,” whose editors (Michael Barone and Grant Ujifusa) are card-carrying members of the New York-Washington elite. They describe it as “one of the leading-edge parts of nation, a state whose growing economy, booming demography and vibrant culture are in many ways typical of the way the nation is going.” In fact, North Carolina has made so much dadgum progress that Jed Clampett would barely recognize the place.

The South, however, is not alone in generating derision from ultrasophisticates in the news media. Every four years, Iowans get a lot of attention from the national press corps — along with more than their share of jibes, sneers and patronizing pats on the heads for being so earnest, rural, white-bread and dull.

The day after last week’s presidential caucuses, Boston Globe columnist David Nyhans addressed the Hawkeye State dismissively: “You had your fling, it’s over, and now as you slip back into somnolence, take solace knowing you get more bites at the apple than most citizens elsewhere.” Somnolence? It’s kind of him to let those Americans located west of the Hub of the Universe know that they’re not dead — just sleeping.

What else could they be doing without the diversions of the Northeast Corridor? New York Times columnist Gail Collins ventured into the American Siberia to find out why anyone would show up to hear a campaign speech by Steve Forbes and left with the mystery not quite solved: “It could just be,” she finally concluded, “that people who live in Iowa in the winter have a lot of time on their hands. ‘It was either come here or go to exercise class,’ one elderly woman said.”

Atlanta Braves pitcher John Rocker got in a lot of trouble for his hostile caricature of New Yorkers, which relied mostly on gross stereotypes of millions of people he has obviously never tried to know or understand. He could be finished in baseball, but maybe there’s a future for him in journalism.

The GOP should sit back and savor its victory

Thursday, February 10, 2000

It’s 1975. South Vietnam has just fallen to communism, inflation is surging, the federal budget is deep in the red, the economy is in a straitjacket of wage and price controls, socialism is in vogue among young people, crime is rampant, taxes are painfully high and government is growing ever bigger. For conservatives, libertarians and other advocates of limited government, the present is grim and the future looks worse.

Suppose back then, a visitor from the future had arrived to report on the state of America in the year 2000: Communism is dead, the United States is the world’s only superpower, socialism has been discredited, inflation has been vanquished, the free market reigns, crime is ebbing, the federal budget enjoys a fat surplus, tax rates are down and federal spending is declining. And all this under a Democratic president!

What would anti-government types have said then if they’d known what lay ahead? They’d have rejoiced: “We don’t have to die to go to heaven; we just have to wait till the turn of the millennium.”

But here we are 25 years later, and liberals are the only people who feel they’ve reached the promised land. A few decades from now, history may remember Bill Clinton as one of the most successful Republicans ever to occupy the White House.

For the moment, though, Clinton’s critics persist in feeling deeply dissatisfied, not because the world is still going to hell in a handbasket, but because Democrats are still in power, pushing many liberal ideas and getting all sorts of credit they don’t deserve.

Some of their complaints are understandable. It was the tight money policy of Alan Greenspan, a disciple of “Atlas Shrugged” author Ayn Rand, that laid the foundation for the longest economic expansion in American history. It was a Republican president who carried out much of the tax-cutting and deregulation now powering the engine of growth. It was a GOP-controlled Congress that forced Clinton to agree to a plan that produced the current budget surplus.

While taking credit for policies that he had nothing to do with, didn’t originate or adopted only under duress, Clinton also continues to press for new spending programs — $126 billion worth in last week’s State of the Union address, which came only five years after he declared the end of the era of big government.

But no one expects him to get most of the goodies he wants to pass out. And whatever his budget fantasies may be, the era of big government really is pretty much over. Even with swelling surpluses, the Congressional Budget Office projects that federal taxes will take a slightly smaller share of our income in coming years than they take now.

On the expenditure side, the CBO says that even if federal discretionary spending grows as fast as the rate of inflation after this year (which is more than it would under existing policies), federal spending will drop from 18.5 percent of national income today to just 16.5 percent in 2010. The last time federal outlays were that low was 1958. Ronald Reagan never got spending below 21.5 percent of gross domestic product.

No one could have ever predicted that a Democratic president someday would boast about his plan to completely pay off the national debt — something not done since 1835. Until Reagan came along, liberals laughed at anyone who worried about such matters. But years of opposing tax cuts have conditioned Democrats to extol fiscal discipline as the highest possible virtue. Al Gore promises to continue running surpluses even if the economy stalls — a shift on the order of the pope endorsing premarital sex.

Some liberals have noticed the reversal and don’t like it one bit. Former Labor Secretary Robert Reich castigates Clinton and other Democrats for “sounding like Coolidge Republicans” because they are “spooked by the possibility that voters will be attracted to ambitious Republican tax-cutting plans in the fall, and they haven’t the confidence to build public support for an equally ambitious program centered on education and health care.”

He’s right. Democrats may propose small programs, but they are leery of big ones. Why? Because the party learned the hard way that Americans are skeptical of expansive government and don’t trust Washington to spend their money wisely. They would rather see taxes go to retiring the debt, a project that may do some good and can hardly do any harm, than fund a federal spending spree that may be useless or worse.

Measured against where the nation stood a quarter-century ago, the opponents of leviathan have largely carried the day. Instead of lamenting Clinton's success, they might take it for what it is: their victory.


High scores should give offense to baseball fans

Something needs to be done to rescue the game

Thursday, May 11, 2000

And now, fans, here are the Arena Football results: Anaheim beat Toronto 16-10, Chicago topped Seattle 18-11, San Francisco edged Cincinnati 13-9, Florida got by Pittsburgh 12-5, and . . . What’s that? I’m sorry. Those scores are not from the Arena Football League. They’re from the Arena Baseball League.

Of course, Major League Baseball hasn’t adopted the name yet, but the handwriting is on — make that over — the wall. The thinking is that you can never have too much offense. Home runs have been flying like airliners out of O’Hare: in large numbers and at a nonstop pace.

Monday night, Oakland’s Jason and Jeremy Giambi both cleared the fences, making them the first brothers to homer in the same game since — well, since last October, when Vladimir and Wilton Guerrero did it for Montreal. In the entire history of Major League Baseball, how many pairs of brothers had previously attained that distinction? Three.

What the headlines suggest, the box scores confirm. In April, a total of 931 balls reached the seats — breaking the record for the month and exceeding last April’s total by 140.

In the first five weeks of the 2000 season, teams played 457 games, during which they averaged nearly 11 runs a game and 2.59 home runs. In the first 457 games of the 1992 season, by contrast, teams managed just 8.39 runs and 1.45 homers per game. In eight brief years, total scoring has risen by 28 percent and long-distance connections are up by a whopping 79 percent. And we’re not even into warm weather, when hitters really get cooking.

Some of the reasons for the offensive surge are obvious, and some are in dispute. Hitters claim they are just bigger, stronger and better than hitters of old, thanks to advanced nutrition and hundreds of off-season hours spent lifting weights, instead of fishing or selling insurance as their forebears did.

There is something to this argument: Joe DiMaggio, who won two home run titles, was a willowy 6 feet 2 inches and 193 pounds, which these days is a good size for a patent lawyer. Mark McGwire goes 6 feet 5 inches and 250 and is large enough to moonlight in rodeo bull-riding — as the bull.

Expansion has made life easier for McGwire and other hitters by letting them face undertalented pitchers who otherwise would be riding buses in the minors. In the struggle to fill pitching staffs, major league teams have become about as selective as a 1st-grade talent show. Baltimore currently has four pitchers with an earned run average of 9.00 or more. Toronto has three with ERAs above 11. In the old days, an ERA of 9.00 would get you a job driving a truck.

New fields have also been kind to hitters. The cavernous Houston Astrodome, where home runs went to die, has been replaced with Enron Field, which measures just 315 feet down the right field foul line and 326 feet in left — reasonable dimensions for a high school stadium.

Hitting a baseball 315 feet used to be good for a single, if you were lucky. Letting a team put the fences that close makes about as much sense as letting a team put the pitcher’s rubber 55 feet from the plate.

And let’s not even talk about Colorado’s Coors Field, where thin, dry air and weak gravity combine to turn pop-ups into homers and pitchers into quivering wrecks. During the 1998 All-Star game there, the best moundsmen in the National League wore themselves out throwing 214 pitches and giving up 13 runs.

But pitchers insist that much of the problem lies with a turbo-charged ball, and no one this side of Bud Selig doubts it. Even Atlanta’s peerless Greg Maddux says the current ball is so hard it should have a Titleist stamp on it.

If the offensive boom is due to good hitters and weak pitchers, why did 71 players strike out 100 times or more last season? After 35 games this year, Sammy Sosa has as many strikeouts as Joe DiMaggio ever had in an entire season.

What can be done? The ball can have a little of the juice taken out, fences can be placed farther from home plate, the strike zone can be expanded beyond postage-stamp size, and batters can be barred from wearing all that plastic armor on their forearms and elbows, which makes them impervious to inside pitches. It also wouldn’t hurt to eliminate a few teams in smaller markets, thus freeing a lot of guys to pitch in Sunday morning softball leagues.

Nobody wants to return to 1915, when Braggo Roth won the American League home-run title with 7. But something needs to be done to rescue a game that should be more than longball. Arena football isn’t true football, and baseball fans may eventually conclude that this new version of their game should be outta here.

The Great Emancipator

Or was Abraham Lincoln actually a white oppressor?

Sunday, May 14, 2000

Millions of admiring words have been written about Abraham Lincoln, but not one of them was composed by Lerone Bennett Jr. Bennett is executive editor of Ebony magazine and author of an incendiary new book, “Forced Into Glory: Abraham Lincoln’s White Dream,” which depicts the 16th president as an oppressor, a supporter of slavery and the relentless enemy of black equality. Racism, says Bennett, was “the center and circumference of his being.” Next to Bennett’s version of Lincoln, David Duke looks good.

Despite its provocative thesis, the book has been a well-kept secret, most likely because it comes from the obscure Johnson Publishing Co. Time magazine columnist Jack E. White complains that “Forced Into Glory” is “not getting the kind of attention non-fiction works by white authors have received.” To address our racial issues, he says, we need “to stop ignoring Bennett’s discomfiting book.”

The book is useful because every generation ought to re-examine the received assumptions of our political culture. Bennett’s portrayal will surprise both whites and blacks raised to revere the Great Emancipator. But this massive exercise in demonization fails because it misunderstands both Lincoln and his era.

At a superficial level, Lincoln harbored many of the racial prejudices of the time. He grew up in a society where black inferiority was assumed, and he was given to racial jokes and even use of the “N” word. Before becoming president, he said he didn’t favor racial equality. As president, he insisted that “if I could save the Union without freeing any slave, I would do it.”

But Lincoln’s racial attitudes evolved as he grew older — to the point that Frederick Douglass said he was “the first great man that I talked with in the United States freely, who in no single instance reminded me of the difference between himself and myself, of the difference of color.”

Bennett accuses Lincoln of caring nothing about the plight of blacks, but the truth is that Lincoln took the position throughout his career that slavery was a “monstrous injustice.” The important thing is not that Lincoln was free of racial prejudice, but that he could rise above it to oppose slavery and work toward its extinction.

Part of the fraud Bennett ascribes to Lincoln is the Emancipation Proclamation, which he says did not free any slaves. It’s hardly news that the decree applied only to areas under rebel control — where Lincoln’s writ did not run. But it did make clear to everyone, North and South, that the war was henceforth a war not only to save the Union but to end slavery.

It was cheered by abolitionists despite its limitations, while being bitterly denounced by pro-slavery leaders, who saw it as an invitation to slave revolt. Lincoln didn't stop there: He pushed for a constitutional amendment to abolish the institution everywhere, something he lacked the legal authority to do by himself.

If Lincoln had pushed harder and earlier for abolition, he might have satisfied Bennett. But, says Princeton historian James McPherson, he would have shattered support for the war, with the result that “the Confederacy would have established its independence, and with 14 states instead of 11.” And slavery would have survived.

His proposal to resettle blacks abroad is reviled by Bennett as “the ethnic cleansing of America.” Lincoln did support the idea, not because he hated blacks but because he knew the depths of racism among whites. If blacks couldn’t gain equality here, he reasoned, might they not be better off in a homeland of their own? And wouldn’t that prospect defuse white support for slavery? Lincoln eventually abandoned this option, though, and set about trying to create a society where blacks could live in freedom.

He pursued his goals with a political skill and cunning that often confused his friends as well as his enemies. But pursue them he did, without cease. Said Frederick Douglass, himself a former slave, “Viewed from the genuine abolition ground, Mr. Lincoln seemed tardy, cold, dull and indifferent; but measuring him by the sentiment of his country, a sentiment he was bound as a statesman to consult, he was swift, zealous, radical and determined.”

Lincoln didn’t have to embark on a war to put down the rebellion of the slave states, but he did. He didn’t have to accept the deaths of 360,000 Union soldiers in the effort, but he did. He could have made peace with the rebels and left the slaves to their fate in a Confederate States of America, but he refused.

Instead, Lincoln never veered from a painful, costly and bitter course aimed at both preserving the republic and ridding it of slavery. And in the end, he succeeded, to the everlasting benefit of both races. If those achievements were the work of an enemy, black Americans would not need friends.

Perpetuating a useless dynasty

Thursday, June 22, 2000

He’s a photogenic and well-educated but conspicuously unaccomplished guy who seems to think he’s entitled to high office simply because of his name. No, I’m not talking about George W. Bush or Al Gore. I’m talking about Prince William, who has gotten a torrent of publicity for reaching his 18th birthday, an achievement within the ability of most people on Earth.

Well, that’s not the whole reason he’s getting all this attention. There’s also the fact that he’s a member of the British royal family, son of Prince Charles and the late Princess Diana and, if things go as planned, the future King William V. Thanks to these attributes, plus his money and good looks, he is now suddenly regarded as “the world’s most eligible bachelor,” “a global superstar,” and a suitable subject for intensive press coverage.

But why? The most you can say about William is that in 30 or 40 or 50 years, if he’s lucky, he will inherit the throne of a pathetically irrelevant monarchy in a small island nation. In the meantime, he will wait for his grandmother and then his father to live out their allotted days and vacate the job, whose prerogatives and duties have nothing to do with actually governing the country.

The British crown, once the terror of the world, is a fake monarchy that would cause Henry VIII to chop off his own head in despair at how far his office has fallen. William could hardly have less actual power if his family were named Smith instead of Windsor. He has to do nothing to become king, which is perfect preparation for a job in which he will do nothing.

So what difference does it make that he’s now 18? Why, it means he may be addressed as “Your Royal Highness.” In other respects, Britons may be surprised to find that life will go on just as before. In fact, apart from living lavishly at public cost for his entire life, young William stands to have not the tiniest tangible impact on his subjects. Why should anyone in Britain care about him — much less anyone outside Britain?

Americans ought to make it a point not to care. In a couple of weeks, we will celebrate the 224th anniversary of the Declaration of Independence. Independence from what? From the British crown, that’s what. We fought a war for the inestimable privilege of not giving a rat’s bottom what is going on in the House of Windsor or any other British royal family, and I have good news: We won.

As a direct result, the future king of England has no more to do with us than the future king of Norway or the future grand duke of Luxembourg. Did you ever see the heir apparent to the grand duchy of Luxembourg on the cover of Newsweek?

But somehow the British royals are famous in spite of their insignificance. About the only work available to them is providing material for the tabloids, which they do through various sorts of scandalous and dysfunctional behavior. We are reliably informed that William has been known to take a drink and smoke the occasional cigarette, though he is believed to avoid drugs.

Given that limited information, it’s too early to tell if William will match his relatives’ record of promiscuity, mental defects and plain silliness. Luckily for Americans, we don’t have to worry, since we can always count on an endless parade of professional athletes, movie stars and Kennedys to supply news appealing to our baser instincts.

Two mysteries arise here. The first is why the British people continue to tolerate a monarchy that serves no useful purpose. It’s like carrying a mortgage on a mansion that you are not allowed to live in or rent out, only admire from a distance.

The other question is why members of the royal family participate in the charade. Being a princess obviously didn’t contribute to the happiness or well-being of William’s mother, and his father has squandered his life waiting for a job that no self-respecting person would want. The first Queen Elizabeth was a figure of great historical consequence. The second is the moral equivalent of a wax dummy.

William is being hailed as someone who could redeem the royal family in the eyes of the public. But the most valuable thing he could do is to renounce the whole absurd business and set about living something resembling a normal life.

A recent poll found that three out of four young people in Britain would rather live in a republic than a monarchy. William would be doing himself and everyone else a favor to say, “You know what? So would I.”

In search of a gasoline conspiracy

Sunday, July 9, 2000

Cheap gasoline has often been a hardy perennial of life in America, but it’s not actually a constitutional right. This has come as a shock to motorists and elected officials, both of whom were laboring under the impression that fuel prices, like avalanches, can only travel downward.

The scenes of outrage have been spectacular to behold. Al Gore reviled the petroleum industry for profits that “have gone up 500 percent” because of “Big Oil price-gouging,” and called for a federal antitrust investigation of oil companies. The Federal Trade Commission, meanwhile, has already launched one. Iowa Democratic Sen. Tom Harkin demanded oil executives be dragged like common criminals before Congress to explain their behavior.

Somewhere, I’m sure, a mob is heating tar and gathering feathers.

Prices of other vital commodities — strawberries, steel, houses, Microsoft shares — are known to fluctuate dramatically without anyone suspecting a vast conspiracy to rob the public. But the specter of Big Oil as a sinister, all-powerful force dies hard. People who are old enough not to worry about monsters under the bed somehow entertain the outlandish superstition that oil companies can enrich themselves at will.

The evidence critics offer lately is that prices have soared in recent weeks, and at one point went as high as $2.13 a gallon, on average, for unleaded regular in the Chicago area. Not only that, but as soon as the FTC got interested in looking for violations of the law, the prices dropped. The possibility that the interaction of supply and demand might account for these events is too simple for sophisticated politicians to accept. Something bad has happened, and a villain must be found.

But Big Oil is an implausible candidate. If the oil companies have the ability to boost prices and harvest obscene profits whenever they choose, why have they waited so long to do it? If oil prices had merely kept pace with inflation over the last two decades, they’d be nearly double what they currently are. For most of the last 20 years, oil companies have managed only mediocre profits.

Since 1977, the American Petroleum Institute sorrowfully notes, their earnings have averaged a 9.7 percent return, compared to 11.5 percent for U.S. industry as a whole. In recent years, while profits elsewhere have been rising, profits in petroleum have dropped. From 1994 through 1998, they were just half of the national average.

Gore’s alleged 500 percent increase sounds scandalous until you consider that he’s making a comparison with the first part of 1999 — when crude oil prices were at the lowest level, adjusted for inflation, since the Great Depression. Unfortunately for consumers, you can’t expect an after-Christmas sale to go on all year, every year. Oil-producing countries cut back their production in response to low returns, and prices rose from $10 to $34 a barrel. Only a dunce would be surprised that pump prices have also gone up.

Conspiracy theorists wonder why prices climbed to such heights in the Midwest, and they have no patience for tricky explanations involving new federal environmental rules, or state and local taxes, or pipeline breaks. But we might just as well ask why prices didn’t go so high elsewhere. If the oil companies are so adept and ruthless at gouging consumers, why is the rest of the country getting off easy?

And while we’re at it, what is “price-gouging” except charging the rate the market dictates? During periods when demand exceeds supply, the only way to restore the balance is higher prices. If service stations kept prices at last year’s levels, they would quickly exhaust their stocks, leaving motorists with no gasoline at any price. We tried that approach back in the ’70s, and it didn’t make most of us happy or prosperous.

If the free-market method of allocation means big bucks for producers at the moment, that’s only fair. After all, Congress doesn’t rush federal relief to oil companies during the bad times, when they’re capping wells and laying off workers. If oil companies can’t make money during periods of glut and aren’t allowed to make money during periods of shortage, when exactly are they supposed to make money? Unless the Salvation Army wants to take over the business and turn it into a charity, profits are needed at least once in a while.

Since Ronald Reagan deregulated the industry in 1981, the nation has enjoyed an era of cheap and abundant supplies, with only occasional exceptions. But we could always go back to the days when federal meddling in the petroleum market gave us both acute shortages and high prices. If you’ve forgotten what that was like, there are some politicians who could refresh your memory.

Bush’s foreign policy would be an education

Thursday, July 13, 2000

Parents sometimes gush a bit when talking about their kids, so you have to excuse former President George Bush if he gets carried away in making the case for his son, the presidential candidate. Asked if George W. knows as much about foreign affairs as he did when he arrived in the Oval Office, Dad replied, “No. But he knows every bit as much about it as Bill Clinton did.”

Swell. Eight years ago, Republicans warned the American people of the dangers of turning the leadership of the Free World over to a greenhorn governor who wouldn’t know Bahrain from Bolivia. Now they’re using Bill Clinton as a model.

As if that weren’t high enough praise, the senior Bush kept talking. In an interview last week with The New York Times, he was asked if his son knows as much about foreign affairs as Al Gore. “Gore’s had eight years of experience there,” Bush admitted. But never mind: “You get good people. George knows enough to do that . . .”

Message: Worse than Gore is today, but no worse than Clinton was eight years ago. Sound like a good campaign slogan?

Republicans who in 1992 stressed the critical importance of international expertise have developed a new tolerance for foreign-policy novices. Back then, they extolled Bush the Elder’s vast knowledge of the subject, based on his service as ambassador to the United Nations and China, his missions abroad as vice president and his prosecution of the Gulf War.

President Reagan made a campaign speech praising Bush as “a trustworthy and level-headed leader who is respected throughout the world.” And his opponents? “Foreign policy to Ross Perot and Bill Clinton is just that — foreign,” sniped Republican Party chairman Richard Bond. When Pat Buchanan appeared at the GOP convention to get behind the party nominee, he said Clinton’s foreign experience “is pretty much confined to having breakfast once at the International House of Pancakes.”

An unkind observer might point out today that George W. has international experience only in the sense that Texas, as the tourism slogan says, is “like a whole other country.” The father was on a first-name basis with every foreign leader who mattered. The son, when a radio talk show host quizzed him on who was in charge of several important foreign countries, couldn’t even come up with last names.

Being unschooled on international relations is not entirely his fault. Governors have little to do with such matters, beyond the occasional trade mission to urge foreigners to buy Idaho potatoes or Texas watermelons. They are obligated to focus on more parochial concerns, and they are wise to admit as much. When he was first running for president, Clinton made an unintentionally comic effort to enhance his foreign-policy credentials by reminding voters that he had served as commander-in-chief of the Arkansas National Guard.

But there was nothing to stop Bush from boning up on the subject on his own. And he does not inspire confidence when he suggests that his unfamiliarity with regions east of Kennebunkport isn’t important because he can always find smart people to tell him what he needs to know.

This brings to mind William Kristol, the neo-conservative policy intellectual who, after joining the staff of George Bush's vice president, became known in Washington as Dan Quayle's brain. Bush adviser Condoleezza Rice may be the best foreign-policy thinker any president could have. But it would be comforting to know she won't be doing all the thinking.

The Clinton experience, contrary to Papa Bush’s suggestion, offers no grounds for optimism. Clinton’s foreign policy in his first term consisted mainly of taking promises he had made during the 1992 campaign and breaking them. He vowed to cut off normal trade relations with China, then embraced them. After criticizing Bush for failing to take tough military action in Bosnia, he shied away from doing it himself. He said Bush’s insistence on returning boat people to Haiti was “cruel” and “illegal,” which didn’t prevent him from doing the same thing.

What Clinton learned is that it’s a lot easier to formulate U.S. foreign policy at campaign rallies than in the Oval Office. International relations, unfortunately, is not one of those subjects you can master between Nov. 7 and Jan. 20, or even between the time you become a presidential candidate and the time you enter office. It takes years of study, thought and travel — an effort that Al Gore, for example, has made but Bush has not.

Eight years ago, Republicans warned us that it was risky to elect a president who would need on-the-job training in dealing with our foreign allies and enemies. It turned out they were right. So why do they want to run the experiment again?

Is this any way to choose a veep?

Thursday, July 27, 2000

Becoming president is not easy. George W. Bush is the prospective Republican nominee only because he persuaded a majority of GOP voters to choose him in the presidential primaries, and he will move into the White House only if he can induce about 45 million people to cast ballots for him in November. Becoming vice president is a bit easier. You don’t need 45 million votes. All you need is one.

Our political system has all sorts of mechanisms for fostering public control of government, plus various checks to prevent too much power from lodging in any single place. But nothing of the sort exists when it comes time to choose a vice president. The presidential nominee, acting strictly on his own, makes the choice, and everyone else has to live with it, for better or worse. It’s the most undemocratic feature of our constitutional framework.

Whoever ascends to the second highest office in the land has a reasonable chance of becoming president — as 14 presidents have done. In that case, the American people find themselves being governed by someone over whom they had no say.

Oh, sure, we have a sort of say in the matter when we go to the polls. If you don’t like the idea of Dick Cheney as president, you can vote against George W. Bush. But that’s like saying we have a voice in whom the president marries or how many kids he has. We could vote on that basis, but virtually no one does.

Same thing for running mates. Dan Quayle? Spiro Agnew? Henry Wallace? None of them could have been elected White House janitor in a truly democratic election. But placed on a national ticket, where voters essentially had no power to reject them, each ended up one heartbeat away from the presidency. Only in the most formal, meaningless way do voters have any control.

Quayle proves the case beyond all doubt. When George Bush chose him in 1988, Bush was trailing Michael Dukakis badly in the polls, and Quayle looked like the perfect way to turn a difficult race into an impossible one. He somehow managed the feat of starting out as a national joke and then declining in public esteem.

But come Election Day, Bush and Quayle won easily. And only one out of every 20 people who voted for Dukakis gave Quayle as the reason. In the end, one of the worst running mates ever was utterly irrelevant.

Ignoring the second name on the ticket is a sensible strategy for the informed citizen. Whoever is elected veep probably won’t become president — but whoever is elected president almost certainly will. So even if the presidential candidate you like makes a terrible choice and his opponent makes an excellent one, it would be crazy to let that decide your vote. The only smart course is to vote for the better of the two top nominees and gamble that the resulting vice president will never rise above that office.

But the result is that this critical decision is left entirely to one person. We’d never think of allowing that with other high offices. When a president chooses a Cabinet officer or Supreme Court justice, he has to get Senate approval. To become speaker of the House or president pro tem of the Senate — who are next in line behind the vice president to succeed to the presidency — you need to be elected by a majority of your colleagues, all of whom have been chosen by the people.

It’s easy to be complacent about this method of selection because no vice president has had to be sworn in as president on short notice since Gerald Ford ascended in 1974. So we tend to forget how quickly a nation that chose one person as its leader can be stuck with an entirely different one.

The problem is that there’s no obvious solution. We can’t very well have a separate election for vice president, since that might stick the president with a terribly incompatible partner. We can’t very well require vice presidential aspirants to go through primary elections. We can’t really make the vice president subject to Senate confirmation. The way we choose vice presidents is indefensible — but no obvious alternative presents itself.

We ought to be looking for one. In a democratic system, an office this important should be filled in a way that limits the power of any one person and lets the people be heard. The current system is accepted by the American people because it hasn’t produced any disasters lately. We might feel differently if we still had vivid memories of President Quayle.

Needle exchange protects children

Thursday, August 3, 2000

One of George W. Bush’s favorite slogans is “No child left behind,” and the Republican National Convention has set aside many hours to catalog his devotion to kids. Al Gore has a slew of proposals on education and health care that, we are told, will greatly improve the lot of children. But when it comes to AIDS, the two candidates offer the youngest Americans a whole lot of nothing.

Every year, hundreds of infants are born infected with HIV. They get it from their mothers — most of whom got it by injecting drugs with contaminated syringes, or by having sex with an injecting drug user. So if you want to protect children from AIDS, you have to find a way to prevent transmission via hypodermic needles.

That’s not so hard to do. Amazingly enough, addicts don’t really prefer dirty syringes. They use them only because restrictive laws make clean ones hard to get or expensive. So in the early years of the epidemic, AIDS activists came up with an idea: Give drug users sterile needles in exchange for their old ones.

If you can’t stop people from injecting drugs, the thinking went, maybe you can at least stop them from getting and transmitting the virus. That would save not just their lives, but the lives of their sexual partners and their future offspring.

Hard-line drug warriors scoffed, insisting this approach would merely encourage illicit drug use while having no effect on the HIV infection rate. On both counts, they were wrong.

In one scientific study after another, needle exchange has proven its value. In 1997, the federal National Institutes of Health declared, “There is no longer any doubt that these programs work.” By its estimate, needle exchange can reduce HIV infections among drug users by 30 percent.

Health and Human Services Secretary Donna Shalala eventually reached the same conclusion. “A meticulous scientific review has now proven that needle exchange programs can reduce the transmission of HIV and save lives without losing ground in the battle against illegal drugs,” she said in 1998 — thus meeting the two conditions specified by law for federal funding of such efforts.

But the Clinton administration steadfastly refused to provide any funding. And the Republican Congress, to emphasize its disdain for needle exchange, passed a measure prohibiting the District of Columbia government from implementing this solution, even with non-federal money.

Obstinacy, however, hasn’t solved the problem. Nearly 60 percent of AIDS cases among women can be blamed on drug use or sex with a drug user. Each year, 300 to 400 infants emerge from the womb afflicted by this lethal virus. Many of these victims could have been spared by the simplest of preventives: a clean syringe.

The Republican convention gave a prominent speaking spot to Patricia Funderburk Ware, head of the Family Well-Being Foundation and an advocate of sex education that stresses abstinence. Ware said we need to “insure that not one more American, especially an innocent newborn baby, has to live with this awful disease.”

But she never said a word about dirty needles — which is like talking about obesity without mentioning food. There’s nothing wrong with promoting sexual abstinence among adolescents, but the infants with HIV didn’t get it because they were promiscuous. They got it because one of their parents used a syringe that was fatally contaminated with the virus.

So advocates of needle exchange shouldn’t expect anything from a Republican administration, or from a Democratic one. On this front of the AIDS war, President Clinton has been a conscientious objector, spending his entire term finding reasons not to act. Gore is somewhat more promising: He reportedly advised Clinton to lift the ban, and in a private meeting with two AIDS activists at the 1996 Democratic convention, he said he supported federal funding.

But Wayne Turner, a spokesman for ACT UP Washington, one of those who met with the vice president, dismisses that statement. “Please emphasize that I don’t believe him,” he says. “I have absolutely no faith in this administration, including Gore.”

Selling needle exchange to the American people as the best way to protect infants from AIDS would not be that hard, since that’s exactly what it is. Besides stemming the epidemic without fostering drug use, these programs make perfect fiscal sense. A new syringe costs less than 8 cents. Treating a patient with AIDS costs about $150,000.

You would think one of the two candidates would embrace needle exchange simply because it would save the lives of blameless children. But neither has, and neither is about to.

So maybe they could agree on a joint slogan: Some children left to die.

The unsung heroes in land-mine fight

Sunday, September 10, 2000

When President Clinton refused to sign the 1997 international treaty banning land mines, critics portrayed him as Lucifer’s evil twin. They said the United States was undermining an irreproachable humanitarian cause, exhibiting “arrogance,” and becoming “part of the problem.” After winning the Nobel Peace Prize for her efforts on behalf of the Ottawa accord, Jody Williams of the International Campaign to Ban Landmines lamented, “I think it’s tragic that President Clinton does not want to be on the side of humanity.”

Three years later, judging from a new report by the same organization, the anti-mine campaign has done much to eliminate the threat of these hidden menaces, which kill and maim thousands of people every year. “Great strides have been made in nearly all aspects of eradicating the weapon,” says the study. And you’ll never guess which country deserves a large share of the credit: the United States.

Steven Goose, program director of Human Rights Watch, one of the contributors to the report, says the U.S. is “schizophrenic on this issue. The United States is spending tens of millions of dollars, more than any other country, to assist others with the removal of mines that are already in the ground, and yet the U.S. continues to insist on the right to use mines.”

But there is nothing contradictory about the administration’s position. It refused to go along with the agreement because American mines were not the ones harming innocent people around the world. By demanding that the U.S. relinquish an important weapon immediately, supporters of the treaty were attacking a purely fictional threat. The administration, by leading the charge to get rid of deadly mines already in the ground, chose instead to address a real problem. Better yet, the effort has produced genuine improvement.

The International Campaign to Ban Landmines continues to fault Clinton for not caving in to international pressure, pointing out that 137 countries have signed the treaty, leaving the U.S. in the unsavory company of Cuba, China, Afghanistan, Iraq and Somalia. But it’s clear that Washington’s refusal has been no hindrance in the drive to reduce the carnage.

“In recent years,” notes the report, “it appears that use of anti-personnel mines is on the wane globally, production has dropped dramatically, trade has halted almost completely, stockpiles are being rapidly destroyed, (and) funding for mine action programs is on the rise, while the number of mine casualties in some of the most affected states has fallen greatly.”

On the downside, mines have been used extensively in Chechnya and Kosovo by Russian and Yugoslavian forces. Several governments have deployed the weapons, including Angola, Burundi and Sudan, as have many insurgent armies.

Neither the good nor the bad of the last three years has been affected in the slightest by our decision not to sign. Angola and Burundi aren’t planting mines because of Bill Clinton’s position. And the U.S. never objected to curbing the manufacture, trade and use of mines that can go on killing indiscriminately for years after they’ve served their military purpose.

We balked at joining the agreement only because we weren’t willing to get rid of the mines placed along the 38th parallel to slow a North Korean invasion of South Korea — mines located in areas closed to noncombatants. We also saw no point in forcing our military to abandon “smart” mines that automatically deactivate within hours or days of being laid.

Children in Cambodia and Mozambique weren’t being blown up by U.S. Army mines, and neither were civilians in other places. American mines, in reality, were responsible for none of the problems that the land-mine treaty aimed at eliminating. But the accord pretended that our mines were a dire threat.

The Clinton administration realized where the real problem lay. So it spurned doing something meaningless but dangerous in favor of doing something tangible and extremely useful: getting rid of the mines that actually pose a danger.

Since 1993, the U.S. has devoted more than $400 million to what is known as “humanitarian de-mining assistance” in 37 different countries, and we’re planning to spend another $110 million this year. As Goose acknowledges, that’s more than any other country has contributed.

Our program is one big reason that 22 million anti-personnel mines have been destroyed around the world. It’s also a big reason that the toll in death and disability from these weapons is falling every year.

The president who was not “on the side of humanity,” it turns out, has been an indispensable ally in the effort to rid the world of the danger posed by land mines. He might drop a note to Jody Williams with a brief message: You’re welcome.

Gore and the surplus: Will he spend it all?

Thursday, September 21, 2000

Remember that pony you asked Santa Claus to bring you when you were 7 years old? You were disappointed then, but if you’re still interested, write a letter to Al Gore. A pony for all the people who never got one is about the only thing he hasn’t promised yet.

Bill Clinton has made his mark by undertakings now universally known as “Clintonesque” — unassailable, small-bore programs that made for great applause lines but cost so little that even the stingiest congressional Republicans could hardly object. Clinton, however, governed during a period of budget deficits. Gore expects a large and growing surplus, and he knows just what to do with it: Spend.

In days of old — say, 1998 — every big spending proposal was met with the vexing question of how to pay for it. Raise taxes? Run an even bigger deficit? Cut outlays elsewhere in the budget? None of these options was terribly attractive, so spending growth was contained, if not reversed.

But surpluses give the alluring impression that new programs don’t actually have to be paid for. Taxes won’t rise, other expenditures won’t have to be cut, and there is no deficit to enlarge. Our new buddy Mr. Surplus will take care of it, with no pain or effort on our part. Gore doesn’t even refer to his plans as spending money. He calls it “using our prosperity.”

And use it he does. According to the non-partisan Committee for a Responsible Federal Budget, the spending plans Gore has proposed so far would expand the federal budget by $2.3 trillion over the next 10 years. Gore has promised to preserve the share of the surplus attributable to Social Security. But by CRFB’s calculations, his policies would exhaust not only the entire projected non-Social Security surplus, but part of the Social Security surplus as well. (Bush would do the same thing, says the group, but mostly through tax cuts.)

Keeping up with Gore’s cascade of goodies is like drinking from a fire hose. In August, the National Taxpayers Union Foundation published a study toting up the cost of everything promised so far by the two major candidates. Two weeks later, it had to issue an update because Bush had piled another $33 billion on top of his original plans, while Gore had gone even further, adding $39 billion.

Even these alarming numbers may understate what his ambitions will cost. Gore’s Medicare prescription drug plan is supposed to soak up $340 billion over 10 years. But one lesson of budget history, says Carol Cox Wait of the Committee for a Responsible Federal Budget, is this: “We almost always underestimate the cost of new health care entitlements — and not by a little, but often by 50 or 100 percent.”

There is even more potential for blood-curdling shocks in Gore’s “Retirement Security Plus.” It would obligate the federal government to “match” private retirement savings, with Washington kicking in $3 for every $1 saved by low-income households, $1 for each $1 saved by middle-income families, and $1 for every $3 saved by high-income taxpayers.

Hoover Institution economist John Cogan told The Wall Street Journal that if everyone eligible saved the maximum covered by the program, it would cost a staggering $160 billion a year. Even if participation rates were no higher than those of people in private pension plans (about 75 percent), it would add $120 billion a year to the federal budget, not the $35 billion claimed by the vice president.

All this means that the non-Social Security surplus — and perhaps more — will vanish into the federal maw. That, of course, assumes the surplus will even be there to squander. Budget projections are notoriously unreliable, because unforeseen events can have drastic effects.

Most of the surplus, moreover, is nothing more than a tantalizing possibility. Robert Bixby of the bipartisan Concord Coalition, which advocates fiscal restraint, notes that about 70 percent of the projected 10-year surplus doesn’t appear until the last five years — too far off to be the basis of permanent spending commitments. All it would take to obliterate the budget cushion is a serious recession, which is a real possibility in the next decade.

By then, taxpayers could be on the hook for a raft of new obligations that will demand funding regardless of the state of the economy — and the deficit that took so long to overcome will be back. The presidential nominees act as though the budget surplus is as permanent as the Appalachian Mountains. The way things are going, it will likely resemble the country singer George Jones’ idea of romantic commitment: for better or for worse, but not for long.

For women, a new millennium but old burdens

Women want to live their lives without being dehumanized by those who hold power over them

Sunday, September 24, 2000

Human life is everywhere a state in which there is much to be endured and little to be enjoyed, according to Samuel Johnson. If Johnson had been a female, the observation might have continued: And that goes double for women.

With the collapse of communism and other forms of dictatorship, the last decade and a half has been a period of rapid progress in respect for human rights. But a more intractable tyranny still weighs on that part of the human race that is shortchanged, abused and oppressed merely for being female. This burden isn’t news, but it deserves the attention it gets in a recent report, “Lives Together, Worlds Apart,” published by the United Nations Population Fund.

The scope of the problem is so vast as to defy comprehension, but UNFPA offers some arresting statistics. About 585 million adult females can’t read or write, partly because girls are less likely to be sent to school than boys. Worldwide, the illiteracy rate is nearly twice as high for women as for men.

Some 130 million women and girls, mostly in Africa and the Middle East, have been victims of genital mutilation — horrific enough in itself, but also “nearly always carried out in unsanitary conditions, without anesthetic,” and sometimes resulting in “severe infection, shock, or even death.”

In Africa, there are 2 million more females infected with the AIDS virus than males. One explanation is sexual coercion. “Two million girls between the ages of 5 and 15,” says UNFPA, “are introduced into the commercial sex market each year.” The abuse affects girls even younger than that. A study in Nigeria found that one out of every six patients with sexually transmitted diseases was younger than 5.

A UNICEF study earlier this year estimated that around the world, between 20 and 50 percent of women have been physically abused by an intimate partner or family member. Such treatment is so routine in many places as to be endorsed even by the victims. In Ghana, one survey found, nearly half of all women think a man is justified in beating his wife if she uses contraception without his consent.

A lot of females don’t live long enough to encounter such problems. In places like India and China, abortion is often used to dispose of unwanted girls before they are even born. As infants, girls are disproportionately represented among the victims of infanticide — an antiseptic term for murdering a baby. Those who are allowed to live may succumb later because they are more likely than boys to get inadequate nutrition or medical care.

One consequence of all these phenomena is what Nobel Prize-winning Indian economist Amartya Sen refers to as “missing women”: females who, given normal demographic factors, ought to exist but don’t. He estimates the total number of females who have “disappeared” at between 60 million and 100 million.

It’s hard to lift yourself up if you’re busy holding someone else down, and discrimination against females turns out to be bad for everyone. Besides serving as a drag on economic development, it can amount to a death sentence for children of both sexes. In Kenya, for example, 10.9 percent of children born to uneducated women die before their fifth birthday, compared to 7.2 percent of children whose mothers finished elementary school.

Educated women are more likely to use contraception, reducing population growth. Education also promotes democracy and individual rights, expanding opportunities for males as well as females. In fact, the democratization of East Asia has been led by countries like South Korea and Taiwan, which have long stressed the importance of education for all.

Although the report doesn’t say so, the wave of liberalization that has washed over much of the planet in recent years has improved the lot of women and will continue to do so. Human rights — the right to speak without fear of repression, vote in free elections, read and hear dissenting views, and live under the rule of law — are just as much women’s rights as men’s.

UNFPA’s emphasis is on what governments can do, but in some spheres the best thing they can do is get out of the way. Free markets foster personal freedom and economic development — raising living standards and giving every individual broader choices and greater independence. The elimination of barriers to international trade and investment exposes oppressive cultures to new ideas, which help dissolve the fetters binding women.

What women want is no different from what men want — the right to live their lives as they see fit, without being mistreated or ordered around by those who unjustly hold power over them. It’s not so much to ask, but in most of the world, it’s a long time coming.

The debate left plenty of questions unanswered

Let’s start with abortion, military missions, the oil reserve, Cuba, bureaucrats . . .

Thursday, October 5, 2000

Questions someone really ought to ask at the next presidential debate:

For George W. Bush: You’ve been described as having a thinner resume than anyone elected president since Woodrow Wilson. How can someone with an undistinguished career in business and so little experience in government be adequately prepared for the most important job in the world? Would anyone consider you qualified for the presidency if your name were George Walker rather than George Walker Bush?

For Al Gore: Over the years, you have changed your positions on gun control, abortion, tobacco taxes and a comprehensive nuclear test ban. How can the American people be confident that the positions you have taken during this campaign would bear any resemblance to the ones you would implement as president?

For George W. Bush: You’ve said that the United States should intervene with military force only when our territory is threatened, our people could be harmed or our friends and allies are threatened. Those conditions were not present when your father sent 28,000 troops to Somalia in 1992. Was that mission a mistake?

For Al Gore: You proposed tapping the Strategic Petroleum Reserve because the cost of gasoline has become “much more of a burden on the family’s budget.” What is the appropriate amount for a family to spend on gasoline, and should the federal government guarantee that no family has to exceed that amount? You’ve said no one should have to choose between buying food and buying prescription drugs. Should anyone be forced to choose between buying food and buying gasoline?

For George W. Bush: You say the way to address high oil prices is to reduce oil imports by increasing production in the United States, including the Arctic National Wildlife Refuge. How do you explain that between 1981 and 1999, prices fell steadily even though imports into the United States more than doubled? Since oil prices are set in a world market, won’t Americans pay the same price regardless of whether we import 100 percent of our oil or zero percent?

For Al Gore: You’ve consistently described the last recession as the worst since the Great Depression. How can that be true when the unemployment rate peaked at 7.8 percent, compared with 10.8 percent in the previous recession? You’ve said the current administration turned that recession into the greatest expansion in American history. How can you claim credit for the turnaround when, according to the National Bureau of Economic Research, the recession ended in March 1991?

For George W. Bush: Both you and your running mate have business experience, as well as many campaign contributors, in the oil industry, and both of you, in the past, have suggested that low oil prices are not a good thing. Should the federal government put a floor on how low oil prices can go? Why should the American people expect two former oil executives to be ready to make decisions that would advance the public interest at the expense of the oil industry?

For Al Gore and George W. Bush: You have both endorsed expanded commercial ties and a normal trade relationship with China as a way of promoting the development of free markets, democracy and human rights in a communist country. Why doesn’t that formula apply to Cuba?

For Al Gore: You’ve said this administration has turned record budget deficits into record surpluses. Why did this administration decline to offer a plan to balance the budget until after Republicans gained control of Congress and insisted on it?

For George W. Bush: You have proposed $84 billion a year in new federal spending, and you are the first Republican presidential nominee in decades not to propose cutting or abolishing any major federal spending program. Is there any federal agency you would close down or any spending program you would cut by half or more? You say the vice president’s spending plans would need 20,000 new bureaucrats to administer them all. How many new bureaucrats would yours require?

For Al Gore: In the first debate, you said the federal government needs to provide more aid to local schools, citing a high school girl in Sarasota, Fla., who had to stand for her science class because it was so overcrowded. How do you respond to the principal of that school, who says your account was “misleading at best,” since the classroom has $150,000 in new equipment and plenty of lab stools where the girl could have sat? Why is it that so many of the claims you’ve made during the campaign don’t stand up to factual scrutiny?

For the American people: Do we really want to know the answers?

Looking for leadership on the drug war

Sunday, November 5, 2000

Here’s our quiz for today: Was it Al Gore or George W. Bush who said, “On my first day in office, I will pardon everyone who has been convicted of a non-violent federal drug offense. I will empty the federal prisons of the marijuana smokers and make room for the truly violent criminals who are terrorizing our citizens”?

No, it wasn’t Gore or Bush. It was another presidential candidate — Harry Browne of the Libertarian Party. Browne, who favors free markets, limited government, and deep tax cuts, has virtually nothing in common with Green Party nominee Ralph Nader, who thinks the only thing better than big government is giant government. But the two do converge on the drug issue.

Nader has come out in favor of legalizing marijuana and drastically changing policy on other drugs: “Addiction should never be treated as a crime. It has to be treated as a health problem. We do not send alcoholics to jail in this country. We do not send nicotine users to jail in this country. More than 500,000 people are in our jails who are non-violent drug users.”

It takes outsiders like these to state the obvious. The American war on drugs has been going on for more than two decades, and not only is victory nowhere in sight, but no one really expects it will ever be won. It has been called our domestic Vietnam — long, costly and unsuccessful. The difference is that in Vietnam, we eventually acknowledged the futility of our efforts.

The two major party nominees ought to be able to see through the myths of drug prohibition. Gore has acknowledged smoking cannabis in his younger days, and Bush has been careful not to deny ever using illicit substances.

But instead of drawing the logical conclusion from their experience — that drug offenders who are not arrested and incarcerated generally go on to lead responsible, productive lives — they insist on enforcing laws that, due to good luck, were never applied to them. All we can expect of a Bush or Gore administration is to identify what’s failed in the past and do twice as much of it.

This is a tried and true approach. Since 1980, notes Ethan Nadelmann, executive director of the Lindesmith Center-Drug Policy Foundation in New York, federal spending on anti-drug efforts has risen from $1 billion to $18 billion, and state spending has followed the same upward trajectory. The number of people in state prisons for drug offenses has climbed more than tenfold. Nearly 60 percent of all inmates in federal prison are there on drug charges.

To a large extent, law enforcement in America is just busting crackheads and pot peddlers. Last year, the number of people arrested for marijuana offenses exceeded 700,000, the highest in American history — and 88 percent of the arrests were for simple possession, not trafficking.

We now arrest more people for marijuana offenses than for all violent crimes combined. We incarcerate more people for drug crimes than the countries of the European Union incarcerate for all crimes.

The people most likely to get caught in the dragnet are not people resembling the young George W. Bush and Al Gore. The National Organization for the Reform of Marijuana Laws says that blacks and whites use pot at roughly the same rate. But blacks are more than twice as likely to be arrested for marijuana possession as whites.

What have we gotten for all this, except lots of jobs for correctional officers? Not much. The White House itself admits that illegal drugs are cheaper now than they were in 1980. Amid the barrage of anti-drug messages, illicit drug use among high school students and young adults has risen, not fallen. Meanwhile, treatment programs of proven effectiveness go begging for money.

Despite the rigidity of their leaders, the American people seem open to a different approach. Measures to legalize the medical use of marijuana horrify White House drug czar Barry McCaffrey, but they have been approved by voters in seven states and the District of Columbia.

Californians are about to vote on a ballot initiative that requires probation and treatment, not jail, for those convicted of drug possession — a change that would spare at least 25,000 people a year from going to prison and save California taxpayers $1.5 billion over the next five years. The measure is leading in the polls. Arizonans approved a similar measure in 1996. Alaskans may go even further: They’ll vote Tuesday on whether to legalize marijuana outright.

On this issue, change will have to come from the bottom, because it’s not coming from the top. The drug war has been a costly, destructive failure for 20 years. With a President Gore or Bush, you can make that 24.

Bush and Gore are neck-and-neck in hypocrisy

Sunday, December 3, 2000

To become president of the United States, says the Constitution, a person has to be at least 35 years old and a “natural born citizen.” Conniving, dissembling, opportunistic snakes are not disqualified, which is a good thing for George W. Bush and Al Gore.

Not that either side is incapable of honesty. Both Democrats and Republicans accuse their opponents of trying to steal the election, and both are right. Each side is striving to prevent the other from winning through unfair and unscrupulous means, so that it can win through unfair and unscrupulous means.

The warring parties bring to mind the 17th-century Puritans, who allegedly came to America in search of religious freedom. Actually, they wanted religious freedom only for themselves, not for anyone else. Gore and Bush each want to exploit the various types of electoral unfairness to his own advantage, not wipe it out.

What partisans portray as a battle over principle looks more like the Super Bowl of hypocrisy. Bush has argued against hand recounts, and particularly against including “dimpled” chads. Yet he signed a law in Texas authorizing those very practices. Letting human beings inspect disputed ballots one by one is dangerously subjective in Florida, but indispensable to honest elections in the Lone Star State.

Gore claims that all he wants is a fair count of all the votes cast in Florida. But he hasn’t stopped a local Democrat from suing to exclude absentee ballots in Seminole County — which conveniently would deprive Bush of several thousand votes. The vice president’s passion for inclusiveness is also absent when it comes to absentee ballots from military personnel stationed abroad: Hundreds of them have been rejected because they lack postmarks. It’s Bush’s attorneys, not Gore’s, who went to court insisting that those votes be included.

Republicans say state law gives counties just seven days to report their vote totals, and that the limit must be enforced even if some counties need more time for recounts. The extension ordered by the Florida Supreme Court, they say, amounts to a flagrant rewriting of the law. At the same time, Bush and Co. think it would be horribly unjust for election boards to actually abide by that law requiring postmarks — because it means some military votes (which tend to go Republican) won’t be counted.

Should we enforce the law as written, or should we make adjustments to get a better measure of the will of the people? Bush and Gore have a clear, coherent answer: Enforce the law when it will help me, and don’t when it won’t.

Democrats were pleased when Secretary of State Katherine Harris was told she couldn’t enforce the seven-day rule but had to abide by the Nov. 26 deadline established by the Florida Supreme Court. But when Palm Beach County election officials took Thanksgiving off and then wanted yet another extension, Democrats thought Harris should disregard the timetable established by the court and grant extra time entirely on her own.

Republicans have taken pride in the near-riot by Bush supporters — “newly assertive Republicans,” in the admiring words of conservative writer Peggy Noonan — at the Miami-Dade County Board offices, which helped induce the board to abandon a recount that would have helped Gore. If a raucous protest led by Jesse Jackson had intimidated election officials in a GOP stronghold, do you think Noonan would be praising the demonstrators’ assertiveness?

Democrats are fond of resolving disputes by turning to the federal government, which they trust more than state and local bodies. But it’s Gore taking the position that the U.S. Supreme Court should stay out of this squabble because it’s the rightful province of the state of Florida. Bush, whose party is usually the champion of state sovereignty, wants the Supreme Court to rule that Florida’s state courts can’t be trusted to interpret their own laws and need benevolent guidance from Washington.

Gore says it’s critical that every vote be counted. But from the start, his real concern has been getting recounts only in counties where he might gain votes, taking advantage of Bush’s failure to ask for recounts within 72 hours after the election, the time limit set by law.

Early on, the two candidates could have asked Katherine Harris to authorize a statewide recount to find out who really got the most votes, and the chances are good she would have agreed. But neither was much interested in that option. Each could think of an option that would be better — better for himself, that is.

When Election Day arrived, the country was divided more or less equally between those who disliked Gore and those who disliked Bush. Before long, Americans may be united in detesting them both.

You talkin’ to me?

Clinton and Gore sort out the blame

Sunday, February 11, 2001

The Washington Post reports that after the presidential election was resolved, Vice President Gore and President Clinton had a one-on-one meeting at the White House in which, using “uncommonly blunt language,” Gore blamed his defeat on Clinton’s sex scandal, and Clinton faulted Gore for failing to run on the administration’s record. Below is an unconfirmed transcript of what could have been said.

President Clinton: Good to see you, Al. Just let me finish signing these pardons, and I’ll be right with you. Let’s see: Charles Manson — check. Timothy McVeigh — check. Puffy Combs — I just can’t say no to Jennifer Lopez. Mark Chmura — you bet. Now, what did you want to talk to me about?

Vice President Gore: I suppose I should begin by thanking you for giving me the opportunity to be the most influential vice president in American history.

Clinton: Isn’t that like being the best dancer in Salt Lake City?

Gore: Ha, ha, Mr. President. I see what you mean. It’s not a terribly meaningful distinction because the standard of comparison is so low. Sort of like being the most honest member of the Clinton family.

Clinton: That would be Chelsea. But give her time. Say, would you like a bag of White House silverware? There’s a couple dozen of them in the closet. I can’t take them all.

Gore: No, sir, I won’t be needing any silverware.

Clinton: Really? Hmm. Maybe I can take them all.

Gore: You really should, sir. The American people know that you and the first lady have made enormous financial sacrifices to serve the public. If you didn’t take it, I think the public would rise up as one and insist that you accept some token of their gratitude. The idea of a freshman incoming senator not having place settings for 300 — it’s too much to bear. Really, don’t skimp. I know there are some people who say you and Hillary would steal a hot stove if it weren’t bolted down, but I never believed that.

Clinton: Depends on how hot, I guess. Now, where were we? Oh, yeah — you were thanking me. Well, you’ve been an excellent vice president, and if I had another eight years, I might be able to teach you how to win a presidential election, too. Say, have you ever thought of taking up the saxophone? Maybe it would loosen up your image.

Gore: You may not have noticed that I got the most votes in the election. Got more than you did either time you ran, now that you mention it. But it’s not easy to get elected to the presidency when people identify you with a partner who’s held in contempt by millions of Americans.

Clinton: Oh, I know Tipper isn’t popular enough to get elected to the Senate. But I don’t think she’s generally held in contempt.

Gore: Not Tipper, Mr. President. You with your White House intern. Do I look like I’ve lost a lot of weight? Because this is the first time in the last year that I haven’t had to carry Monica Lewinsky on my back.

Clinton: Gee, that’s strange. The more Ken Starr and Newt Gingrich talked about Monica Lewinsky, the higher my approval ratings went. Americans may talk like puritans, but trust me — they like a little entertainment in the White House. Heck, if W’s daddy had arranged a little sex scandal for himself, I might be teaching law back in Fayetteville right now. Which reminds me: where did I put Gennifer’s phone number? The nice thing about leaving office is that I can start dating again.

Gore: Thanks for letting me know, sir. I’ll lock up my daughters.

Clinton: Very funny, Al. It’s that sharp sense of humor that’s always made you so popular with the American people. You know, the ones who elected that frat boy, even though he couldn’t find his way out of an elevator if you gave him a map.

Gore: Well, he’s smart enough to keep his pants zipped. Or maybe he just does his thinking with the right organ. Did I mention I got the most votes? I can’t help it if those Republicans down in Florida are so good at stealing elections. But if it weren’t for being dragged down by you, I’d have beaten Bush like a rented mule.

Clinton: Hey, if I’m an albatross, the Chicago Bulls are a basketball team. Aren’t you the guy who lost three debates to an empty chair?

Gore: I suppose I did make my share of mistakes. But I don’t want to leave on a bad note. I’ve learned a lot from you, and it will come in handy if I’m ever investigated by a special prosecutor.

Clinton: No need to thank me. I’ve enjoyed our association, and when the next presidential election rolls around, I’m prepared to do everything I can to help you.

Gore: You mean that, sir?

Clinton: Absolutely! You’re honest, you’re experienced, and you’ve been part of a phenomenally successful administration. Where could Hillary find a better running mate?

Ode to an unpayable debt

Thursday, March 1, 2001

When George F. Will arrives at Judgment Day, he will not be surprised to hear most of the grave faults submitted by his detractors: his unbending conservatism, his merciless wit, his Oxford manners and his association with Sam Donaldson. It may come as news to him to discover yet another alleged sin on the list — that in the mid-1970s, he inspired an impressionable college student to squander his life scribbling opinions.

I know, because I was that student. Back then, anyone who was not left of center found the climate on a college campus as oppressive as the Everglades in August. But one day I opened a copy of The Washington Post to the op-ed page and suddenly felt a blast of cool air. I had discovered George Will, and there is no shame in saying I would never be quite the same.

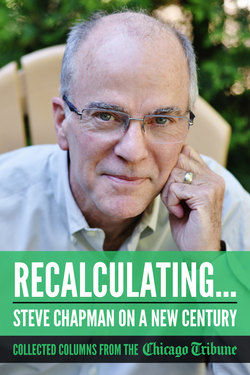

Anniversaries are an occasion for acknowledging debts, especially those that are impossible to repay, and today is an important anniversary for me. Twenty years ago, in an event generally overlooked outside the Chapman household, I wrote my first newspaper column, for the Chicago Tribune. Since then, I’ve written more than 2,000, and I blame them all on Will, whose work gave me the idea, still unproven, that writing commentary might be a useful vocation.

At the start, I read his columns because he was a conservative and so was I. But I kept reading because he was also an exceptional writer and thinker. With his densely satisfying style, his flair for applying philosophical principles to political issues, his allusions to history, his unfamiliar quotations, and his often self-mocking sense of humor, Will performed a kind of alchemy — turning daily journalism into literature.

These days, the way to fame as a commentator is to become a pundit on TV, where volume can drown out logic and the worst vice is failing to be utterly predictable. Will is the rare columnist to gain fame on the strength of his prose and the force of his reasoning, which advance a coherent ideology without being harnessed to any partisan cause. This, after all, is the same guy who celebrated Ronald Reagan’s rise to power, only to spend the next eight years arguing that, contrary to Reagan’s insistence, Americans were undertaxed.

Beginning a Will column, or even a Will sentence, is like sitting down to a meal at a five-star restaurant: You know there are surprises ahead, and you know they will be pleasant. He is probably the only Ph.D. in political science who has applied the tools of that discipline to explain why it’s morally praiseworthy to steal coat hangers from hotel rooms.